strategic framework

Autonomous Agent Security Guidelines (AASG)

Overview

As organizations deploy autonomous agents (software that perceives, decides, and acts on its own) at scale, new risks are emerging, many of which fall outside traditional application and security models.

“AASG” is shorthand for the set of guiding controls, architectures and life-cycle processes needed to secure agentic systems.

It isn’t a single ISO/IEC standard yet, but rather a convergence of:

Academic protocols (e.g., “SAGA: A Security Architecture for Governing Agentic Systems”)

Industry practitioner playbooks

Regulatory/standards tailwinds (e.g., EU AI Act, ISO/IEC 42001)

In practice, you can treat “AASG” as a reference architecture plus a set of controls for operating agents securely.

Why this is so timely in 2026

Autonomous agent deployments are proliferating rapidly.

Traditional security and authentication models break down when the actor is an autonomous agent rather than a human.

Regulators are beginning to specifically mention autonomous systems (not just “AI models”).

Without such guidelines, the risks include data exfiltration, agent impersonation, regulatory non-compliance, and failed audits.

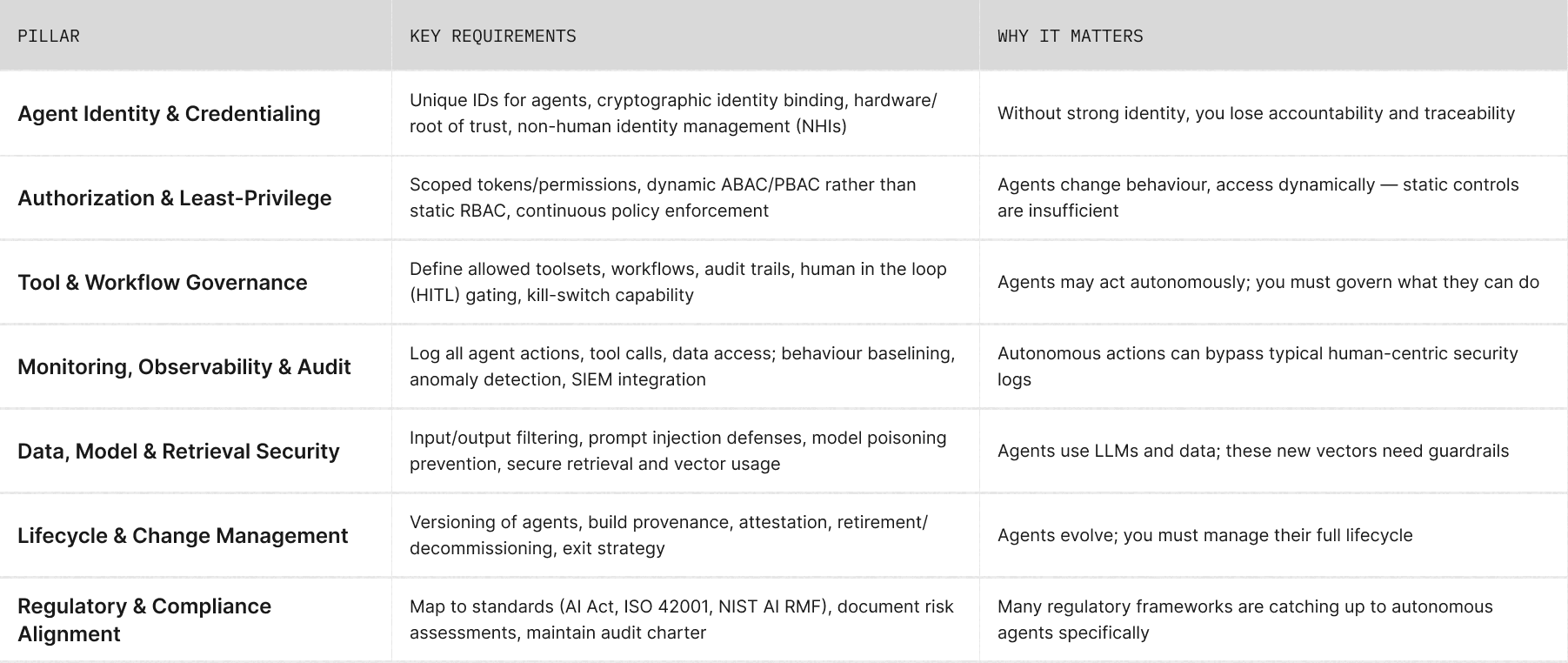

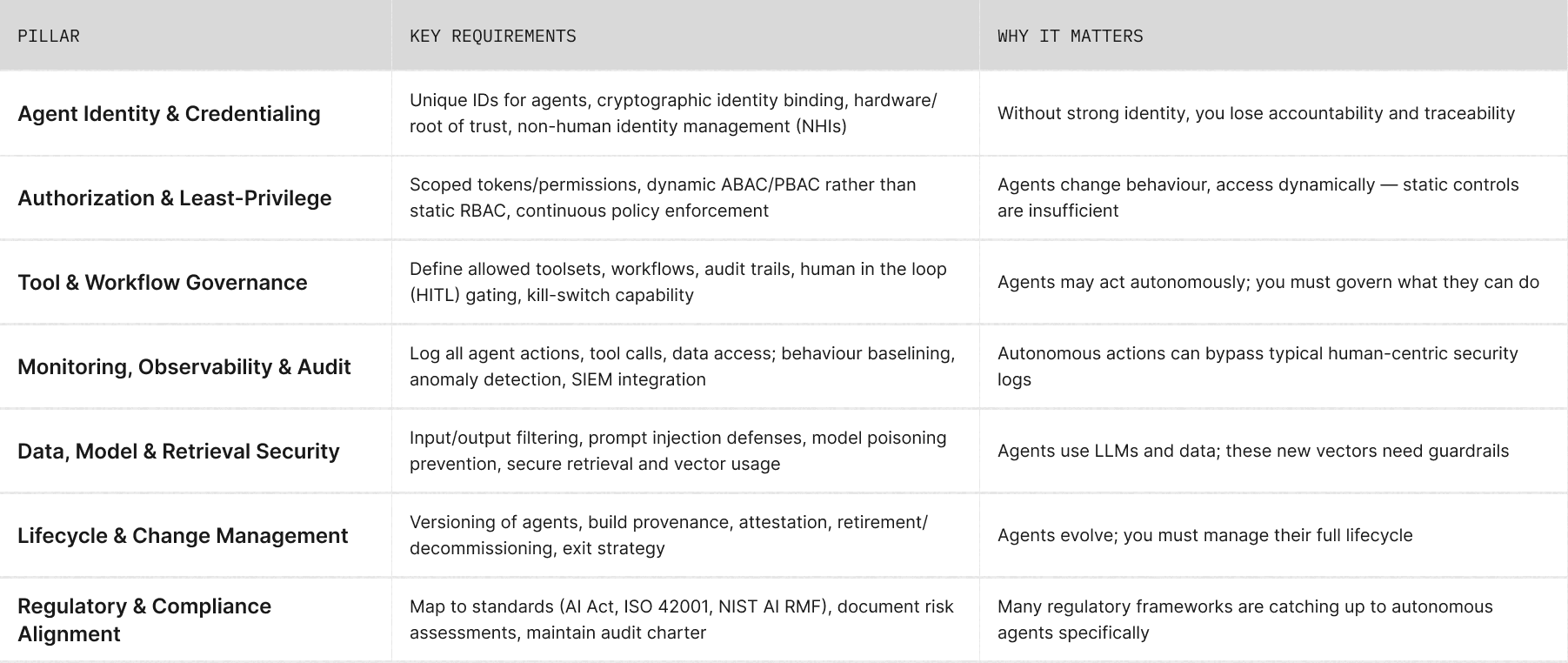

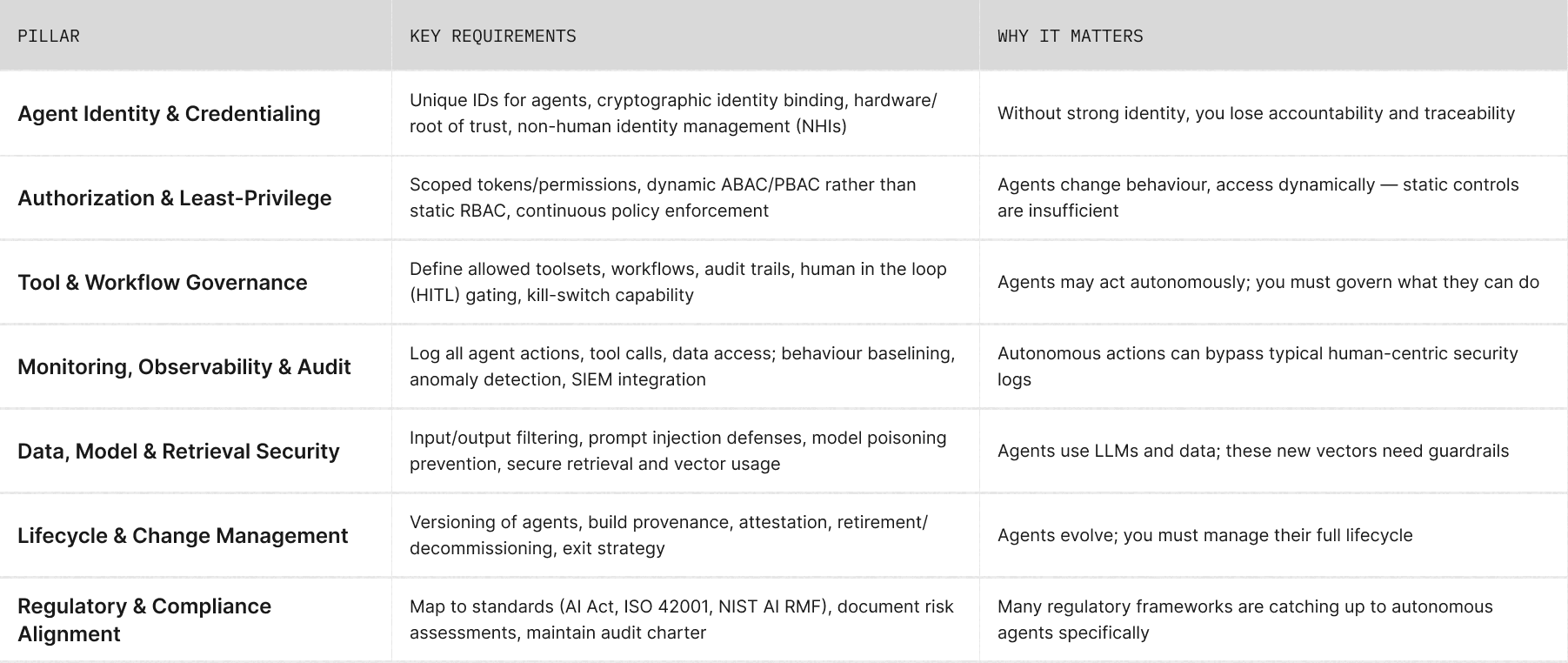

Key dimensions of the emerging AASG

Here are the major pillars that recur across research, industry articles, and emerging protocols:

Agent Registry / Inventory

Maintain a live catalogue of all agents operating in the system.

Each record should include:

Agent ID (unique identifier)

Owner / responsible team

Purpose / mission scope

Data and tool access domains

Version / build hash

Risk rating and current trust status

Why: A registry ensures accountability, discoverability, and auditability — key for both internal security and regulatory reporting.
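
A minimal sketch of a registry record and an in-memory catalogue, assuming Python 3.9+. The names `AgentRecord`, `AgentRegistry`, and `TrustStatus`, and the trust-status values, are hypothetical illustrations of the fields listed above rather than a standard schema; a production registry would live in a durable, access-controlled store.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class TrustStatus(Enum):
    # Hypothetical trust states; adapt them to your own governance model.
    TRUSTED = "trusted"
    PROBATION = "probation"
    SUSPENDED = "suspended"


@dataclass(frozen=True)
class AgentRecord:
    agent_id: str                   # unique identifier
    owner: str                      # responsible team
    purpose: str                    # mission scope
    access_domains: frozenset[str]  # data and tool domains the agent may touch
    build_hash: str                 # version / build hash
    risk_rating: str                # e.g. "low", "medium", "high"
    trust_status: TrustStatus = TrustStatus.PROBATION  # new agents start on probation


class AgentRegistry:
    """In-memory catalogue keyed by agent ID (illustrative only, not durable)."""

    def __init__(self) -> None:
        self._records: dict[str, AgentRecord] = {}

    def register(self, record: AgentRecord) -> None:
        # Refuse silent overwrites so every change to an agent's record is explicit.
        if record.agent_id in self._records:
            raise ValueError(f"agent {record.agent_id!r} already registered")
        self._records[record.agent_id] = record

    def get(self, agent_id: str) -> Optional[AgentRecord]:
        return self._records.get(agent_id)

    def by_owner(self, owner: str) -> list[AgentRecord]:
        # Accountability query: which agents does a given team own?
        return [r for r in self._records.values() if r.owner == owner]
```

Making records immutable (`frozen=True`) means an update has to be an explicit new registration, which keeps changes visible to auditors.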

Identity & Tokenization

Assign each agent a cryptographically verifiable identity — distinct from human or service user accounts.

Use workload identity mechanisms (e.g., SPIFFE identities authenticated over mTLS, or DIDs)

Issue short-lived, scoped tokens tied to specific APIs or datasets

Store private keys or signing material in HSM/KMS, never in plain config

Rotate identities automatically and revoke on anomaly or decommission

Why: Agent identity must be independently provable to support zero-trust operations and non-repudiation of actions.
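
Real deployments would typically rely on SPIFFE SVIDs or signed JWTs from an identity provider. As a standard-library-only sketch of the “short-lived, scoped token” idea, the code below signs an agent ID, a scope set, and an expiry with HMAC-SHA256. The token format, function names, and in-memory key are assumptions for illustration: per the guidance above, the key would sit in an HSM/KMS, and because HMAC relies on a shared secret it proves authenticity but not non-repudiation, which needs an asymmetric signature.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

# Assumption for the demo only: a real key would be held in an HSM/KMS, never in code.
SIGNING_KEY = b"demo-key-do-not-use"


def _b64(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).decode().rstrip("=")


def _unb64(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def _sign(payload: str) -> str:
    return _b64(hmac.new(SIGNING_KEY, payload.encode(), hashlib.sha256).digest())


def issue_token(agent_id: str, scopes: set[str], ttl_s: int = 300,
                now: Optional[float] = None) -> str:
    """Issue a token bound to one agent, a fixed scope set, and a short lifetime."""
    issued = time.time() if now is None else now
    claims = {"sub": agent_id, "scp": sorted(scopes), "exp": int(issued + ttl_s)}
    payload = _b64(json.dumps(claims, separators=(",", ":")).encode())
    return f"{payload}.{_sign(payload)}"


def verify_token(token: str, required_scope: str, now: Optional[float] = None) -> str:
    """Return the agent ID if the token is authentic, unexpired, and in scope."""
    payload, _, sig = token.partition(".")
    # Constant-time comparison avoids leaking signature bytes through timing.
    if not hmac.compare_digest(sig, _sign(payload)):
        raise PermissionError("bad signature")
    claims = json.loads(_unb64(payload))
    current = time.time() if now is None else now
    if current >= claims["exp"]:
        raise PermissionError("token expired")
    if required_scope not in claims["scp"]:
        raise PermissionError(f"scope {required_scope!r} not granted")
    return claims["sub"]
```

A five-minute default lifetime keeps the damage window small if a token leaks; revocation then mostly reduces to refusing to issue new tokens.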

Authorization & Policy Enforcement

Move beyond static roles. Implement dynamic authorization using:

Attribute-Based Access Control (ABAC) or Policy-Based Access Control (PBAC)

Policies evaluating context (dataset classification, time, risk state, task type)

Centralized policy engines (e.g., OPA, Cedar)

Example: an agent may read “TrialData” only if it is approved for project X, the project is in its research phase, and the request originates from a trusted environment.

Why: Agents act dynamically; authorization must evolve with context.
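
In a real system this rule would be written in the policy engine's own language (Rego for OPA, or Cedar). To show the shape of an attribute-based decision without depending on either engine, here is a plain-Python sketch of the “TrialData” example. The attribute names and the set of trusted environments are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical set of environments considered trusted.
TRUSTED_ENVIRONMENTS = {"prod-enclave", "research-vpc"}


@dataclass(frozen=True)
class Request:
    agent_approved_projects: frozenset  # attribute of the agent (from the registry)
    project: str                        # attribute of the resource's context
    project_phase: str                  # e.g. "research", "production"
    environment: str                    # where the request originates
    action: str
    resource: str


def decide(req: Request) -> tuple[bool, str]:
    """Default-deny ABAC check for reading TrialData; returns (allowed, reason)."""
    if req.resource != "TrialData" or req.action != "read":
        return False, "no policy grants this action/resource"
    if req.project not in req.agent_approved_projects:
        return False, "agent not approved for project"
    if req.project_phase != "research":
        return False, "project not in research phase"
    if req.environment not in TRUSTED_ENVIRONMENTS:
        return False, "untrusted environment"
    return True, "all attribute conditions satisfied"
```

Returning a reason alongside the decision makes every denial explainable in the audit trail, and the default-deny first branch means anything not explicitly covered is refused.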

Data and Model Safeguards

Define strict rules for what data and models each agent can access or modify:

Approved model and dataset lists per risk tier

Controlled retrieval layers (e.g., gated vector store access)

Prompt-injection defenses and output filtering for LLM components

Monitoring for state drift or unusual tool invocation patterns

Why: Agents handle sensitive data and drive language models; these safeguards prevent unintentional data leakage and corrupted model behaviour.
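
A minimal sketch of per-tier allowlists combined with a gated retrieval layer, assuming each agent carries a tier label from the registry. The tier names, model and dataset identifiers, and the `retrieve` callback (standing in for a vector store query) are hypothetical placeholders.

```python
from typing import Callable, Iterable

# Hypothetical allowlists keyed by an agent's risk tier.
APPROVED: dict[str, dict[str, set[str]]] = {
    "restricted": {"models": {"summarizer-small"}, "datasets": {"public-docs"}},
    "standard": {
        "models": {"summarizer-small", "analyst-large"},
        "datasets": {"public-docs", "internal-wiki"},
    },
}


def require_model(tier: str, model: str) -> None:
    """Raise unless the model is approved for this tier; unknown tiers get nothing."""
    if model not in APPROVED.get(tier, {}).get("models", set()):
        raise PermissionError(f"model {model!r} not approved for tier {tier!r}")


def gated_retrieve(
    tier: str,
    query: str,
    retrieve: Callable[[str], Iterable[dict]],
) -> list[dict]:
    """Call the underlying retriever, then drop chunks from non-approved datasets.

    Assumes each hit is a dict carrying a "dataset" key, as vector store
    metadata often does.
    """
    allowed = APPROVED.get(tier, {}).get("datasets", set())
    return [hit for hit in retrieve(query) if hit.get("dataset") in allowed]
```

Post-filtering is the simplest gate to show, but where the store supports metadata filters it is safer to apply the allowlist at query time, so restricted chunks are never returned to the agent's process at all.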

Observability, Audit, and Traceability

Log every significant event:

Which agent acted, what it did, which data/tool it touched, and the outcome

Store logs so that tampering is detectable (e.g., WORM storage, or hash-chained, blockchain-style attestations)

Integrate with SIEM, data observability, and AIOps pipelines

Enable behavioural baselining and anomaly detection

Why: Transparent traceability allows you to prove compliance, detect abuse, and evaluate model/agent reliability over time.
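
One way to get blockchain-style integrity for audit logs without running a blockchain is a hash chain: each entry stores the SHA-256 hash of the previous one, so altering any earlier entry breaks verification of everything after it. The sketch below uses only the standard library; the entry fields mirror the logging list above and the class name is hypothetical. A hash chain is tamper-evident, not tamper-proof: deleting entries from the end is only detectable if the latest hash is anchored somewhere the agent cannot write, such as WORM storage or the SIEM.

```python
import hashlib
import json
import time

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class AuditChain:
    """Append-only, hash-chained audit log (illustrative, in memory)."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, agent_id: str, action: str, target: str, outcome: str) -> dict:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {
            "ts": time.time(),
            "agent": agent_id,   # which agent acted
            "action": action,    # what it did
            "target": target,    # which data or tool it touched
            "outcome": outcome,
            "prev": prev,        # link to the previous entry
        }
        body["hash"] = self._digest(body)
        self.entries.append(body)
        return body

    @staticmethod
    def _digest(body: dict) -> str:
        # Canonical JSON (sorted keys, hash field excluded) so identical content hashes identically.
        content = {k: v for k, v in body.items() if k != "hash"}
        return hashlib.sha256(json.dumps(content, sort_keys=True).encode()).hexdigest()

    def verify(self) -> bool:
        """Recompute every hash and check every link back to the genesis value."""
        prev = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True
```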

Human Oversight and Kill Switches

Define boundaries of autonomy and escalation rules:

Human-in-the-loop (HITL) review for high-risk or irreversible actions (publishing, data export, external communications)

Kill switch / emergency stop for runaway or compromised agents

Policy-based throttling or cooldowns for unsafe behaviour

Why: Even advanced agents require containment; humans retain ultimate authority.
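
A minimal sketch of an action gate that combines the three controls above: a global kill switch, human approval for high-risk actions, and a cooldown after unsafe behaviour (modelled here as a rejected high-risk request). The action names, the `approver` callback standing in for whatever approval workflow you use, and the cooldown length are all hypothetical.

```python
import threading
import time
from typing import Callable, Optional

# Hypothetical high-risk action types that always need a human decision.
HIGH_RISK = {"publish", "data_export", "external_message"}


class ActionGate:
    """Route every agent action through a kill switch, HITL approval, and cooldowns."""

    def __init__(self, approver: Callable[[str, str], bool], cooldown_s: float = 300.0) -> None:
        self._approver = approver          # hypothetical hook into a human approval flow
        self._cooldown_s = cooldown_s      # assumed penalty after a rejected action
        self._killed = threading.Event()   # thread-safe emergency-stop flag
        self._cooldown_until: dict[str, float] = {}
        self._lock = threading.Lock()

    def kill(self) -> None:
        """Emergency stop: refuse every subsequent action from every agent."""
        self._killed.set()

    def authorize(self, agent_id: str, action: str, now: Optional[float] = None) -> bool:
        if self._killed.is_set():
            return False
        current = time.monotonic() if now is None else now
        with self._lock:
            if current < self._cooldown_until.get(agent_id, float("-inf")):
                return False  # agent is cooling down after a rejected action
        if action not in HIGH_RISK:
            return True  # low-risk actions proceed autonomously
        approved = self._approver(agent_id, action)
        if not approved:
            # Treat a human rejection as unsafe behaviour and throttle the agent.
            with self._lock:
                self._cooldown_until[agent_id] = current + self._cooldown_s
        return approved
```

The kill switch is checked first so that no other rule, including a pending approval, can override it.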

Lifecycle Management and Provenance

Treat agents like software artifacts:

Version every build (image digest, policy hash, dataset signature)

Attest build provenance — who built, approved, and deployed it

Revoke tokens and archive logs upon retirement

Periodically re-validate aging agents against current compliance rules

Why: Without lifecycle discipline, dormant or outdated agents become unmonitored security liabilities.
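
A sketch of a provenance record that binds the three versioned inputs listed above (image digest, policy hash, dataset signature) into a single fingerprint, and flags agents that are due for re-validation. The field names and the 90-day interval are assumptions; for real build provenance an attestation framework such as SLSA or in-toto is a better fit than a hand-rolled record like this.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

# Assumed policy: every agent is re-validated at least every 90 days.
REVALIDATION_INTERVAL = timedelta(days=90)


@dataclass(frozen=True)
class BuildProvenance:
    image_digest: str
    policy_hash: str
    dataset_signature: str
    built_by: str
    approved_by: str
    deployed_by: str
    last_validated: datetime  # timezone-aware

    def fingerprint(self) -> str:
        """One SHA-256 over the three versioned inputs; any change yields a new ID."""
        h = hashlib.sha256()
        for part in (self.image_digest, self.policy_hash, self.dataset_signature):
            h.update(part.encode())
            h.update(b"\x00")  # separator so ("ab", "c") and ("a", "bc") hash differently
        return h.hexdigest()

    def needs_revalidation(self, now: Optional[datetime] = None) -> bool:
        current = now if now is not None else datetime.now(timezone.utc)
        return current - self.last_validated > REVALIDATION_INTERVAL
```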

Regulatory and Ethical Alignment

Map your security controls to relevant standards:

General: ISO/IEC 27001, ISO/IEC 42001, NIST AI RMF

Sectoral and regional: HIPAA, GDPR, GxP (including GLP), FINMA requirements, MiFID II, or local equivalents

Research ethics: transparency, consent, reproducibility, data minimization

Maintain documentation for audits and AI governance boards.

Why: Agent systems must meet both technical security and ethical or legal accountability standards.

Optional Additions for Mature Platforms

Federated Trust Frameworks — enable secure agent collaboration across institutions or clouds (via verifiable credentials, DIDs).

Behavioral Sandboxing — run new or experimental agents in constrained execution environments before granting full permissions.

Continuous Risk Scoring — dynamically assess each agent’s operational trust based on recent behaviour, model drift, or policy violations.
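
Continuous risk scoring can be sketched as an exponentially decaying sum of weighted signals, so a recent policy violation or drift alert counts for more than an old one. The signal names, weights, half-life, and suspension threshold below are hypothetical tuning values, not recommendations.

```python
# Hypothetical weights per risk signal; unknown signals contribute nothing.
WEIGHTS = {"policy_violation": 5.0, "model_drift": 2.0, "anomalous_tool_call": 1.0}
HALF_LIFE_S = 24 * 3600.0   # assumed: a signal loses half its weight per day
SUSPEND_THRESHOLD = 8.0     # assumed score at which the agent should be suspended


class RiskScore:
    """Exponentially decaying risk score for one agent (timestamps in seconds)."""

    def __init__(self) -> None:
        self._score = 0.0
        self._updated = 0.0

    def _decay_to(self, now: float) -> None:
        elapsed = max(0.0, now - self._updated)  # ignore out-of-order timestamps
        self._score *= 0.5 ** (elapsed / HALF_LIFE_S)
        self._updated = max(self._updated, now)

    def record(self, event: str, now: float) -> float:
        """Decay the old score to `now`, then add the new signal's weight."""
        self._decay_to(now)
        self._score += WEIGHTS.get(event, 0.0)
        return self._score

    def current(self, now: float) -> float:
        self._decay_to(now)
        return self._score

    def should_suspend(self, now: float) -> bool:
        return self.current(now) >= SUSPEND_THRESHOLD
```

Crossing the threshold could, for example, move the agent's registry trust status to suspended or require sandboxed re-admission, while the decay lets a well-behaved agent earn its standing back over time.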
